ComfyUI is a versatile backend, but it has some drawbacks when used as a replacement for Automatic1111-style backends. For example, when ComfyUI is paired with SillyTavern, you lose the ability to specify loras in the text prompt. In fact, by default you can't use loras at all unless you hardcode them into the workflow you import into SillyTavern. With Automatic1111, we could simply write something like `/sd prompt details <lora:lora-file.safetensors:2> lora-keyword`, where `2` is the lora weight, and the respective lora would be activated. Unfortunately, that's not how loras are handled by default through the ComfyUI API. Fortunately, with a few custom nodes and a custom SillyTavern workflow JSON, we can emulate Automatic1111-style lora calling from within the prompt, which makes lora usage in SillyTavern much more accessible.

You will want to install the following nodes:

- ComfyUI Coziness – https://github.com/skfoo/ComfyUI-Coziness

- ComfyUI Inspire Pack – https://github.com/ltdrdata/ComfyUI-Inspire-Pack

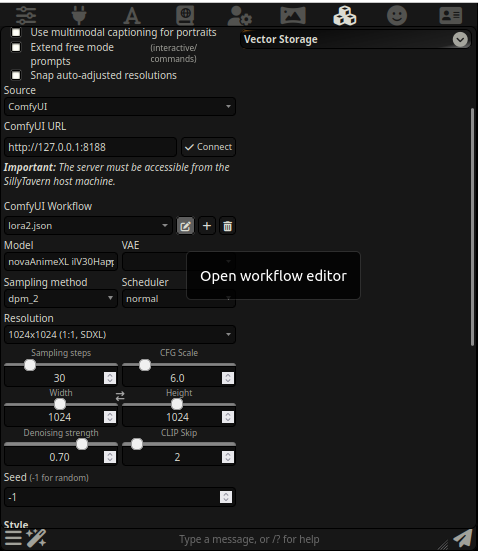

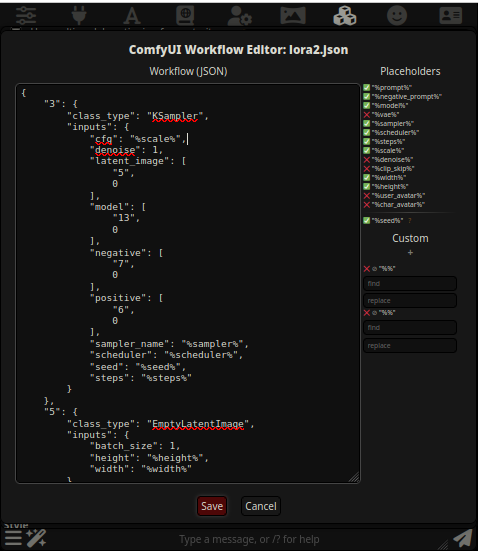

You will need to import this workflow on SillyTavern's Image Generation settings page. To do so, create a new workflow and manually copy and paste the contents of the JSON file into it.
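The workflow JSON you paste into SillyTavern acts as a template: SillyTavern's ComfyUI workflows use placeholders such as `%prompt%` and `%seed%`, which are filled in before the workflow is sent to ComfyUI. The Python sketch below mimics that substitution so you can see why a node whose text input is `%prompt%` receives the raw prompt, lora tags included. It is an illustration under that assumption, not SillyTavern's actual code; the node ids, `class_type` values, and the `fill_placeholders` helper are all hypothetical.

```python
from typing import Any, Dict

# Hypothetical, heavily trimmed workflow in ComfyUI's API (JSON) format.
# Node ids and class_type values are placeholders, NOT real node names.
WORKFLOW: Dict[str, Any] = {
    "1": {"class_type": "ExampleLoraTextExtractor", "inputs": {"text": "%prompt%"}},
    "2": {"class_type": "ExampleSampler", "inputs": {"seed": "%seed%", "model": ["1", 0]}},
}


def fill_placeholders(node: Any, values: Dict[str, Any]) -> Any:
    """Return a new structure with every "%name%" string replaced by values[name].

    Hypothetical helper: unknown placeholders are left untouched, and the
    input workflow is not modified because a fresh structure is rebuilt.
    """
    if isinstance(node, dict):
        return {key: fill_placeholders(value, values) for key, value in node.items()}
    if isinstance(node, list):
        return [fill_placeholders(value, values) for value in node]
    if isinstance(node, str) and len(node) > 2 and node.startswith("%") and node.endswith("%"):
        return values.get(node[1:-1], node)
    return node


if __name__ == "__main__":
    filled = fill_placeholders(
        WORKFLOW, {"prompt": "a cat <lora:lora-file.safetensors:2> lora-keyword", "seed": 42}
    )
    print(filled["1"]["inputs"]["text"])
```

Note that the lora tag survives substitution unchanged; stripping it out and loading the lora is left to the extractor node inside ComfyUI.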

This works by using the Lora Text Extractor node and setting its input to SillyTavern's prompt variable. Any loras in the prompt sent from SillyTavern are parsed out of the prompt and used for generation. The workflow also uses the Inspire Shared Model Loader node, which enables model caching for rapid model switching.
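To make the parsing step concrete, here is a minimal Python sketch of what "parsing loras out of the prompt" means for Automatic1111-style `<lora:name:weight>` tags. It is not the Lora Text Extractor's actual implementation; the regex, the `extract_loras` name, and the default weight of `1.0` when no weight is given are assumptions made for illustration.

```python
import re
from typing import List, Tuple

# Matches Automatic1111-style tags such as <lora:lora-file.safetensors:2>.
# Assumption: the ":weight" part is optional and is a plain decimal number.
LORA_TAG = re.compile(r"<lora:([^:>]+)(?::([-+]?\d*\.?\d+))?>")


def extract_loras(prompt: str) -> Tuple[str, List[Tuple[str, float]]]:
    """Split a prompt into (clean_prompt, [(lora_name, weight), ...]).

    Hypothetical helper for illustration; a missing weight defaults to 1.0.
    """
    loras = []
    for match in LORA_TAG.finditer(prompt):
        name = match.group(1).strip()
        weight = float(match.group(2)) if match.group(2) else 1.0
        loras.append((name, weight))
    # Remove the tags, then collapse the whitespace they leave behind.
    clean = " ".join(LORA_TAG.sub("", prompt).split())
    return clean, loras


if __name__ == "__main__":
    text, found = extract_loras("prompt details <lora:lora-file.safetensors:2> lora-keyword")
    print(text)
    print(found)
```

Running it on the example prompt from the introduction yields the clean text `prompt details lora-keyword` and the list `[('lora-file.safetensors', 2.0)]`: the clean text goes on to the text encoder, while each name and weight pair would drive a lora loader.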